Training UV texture maps with Flux

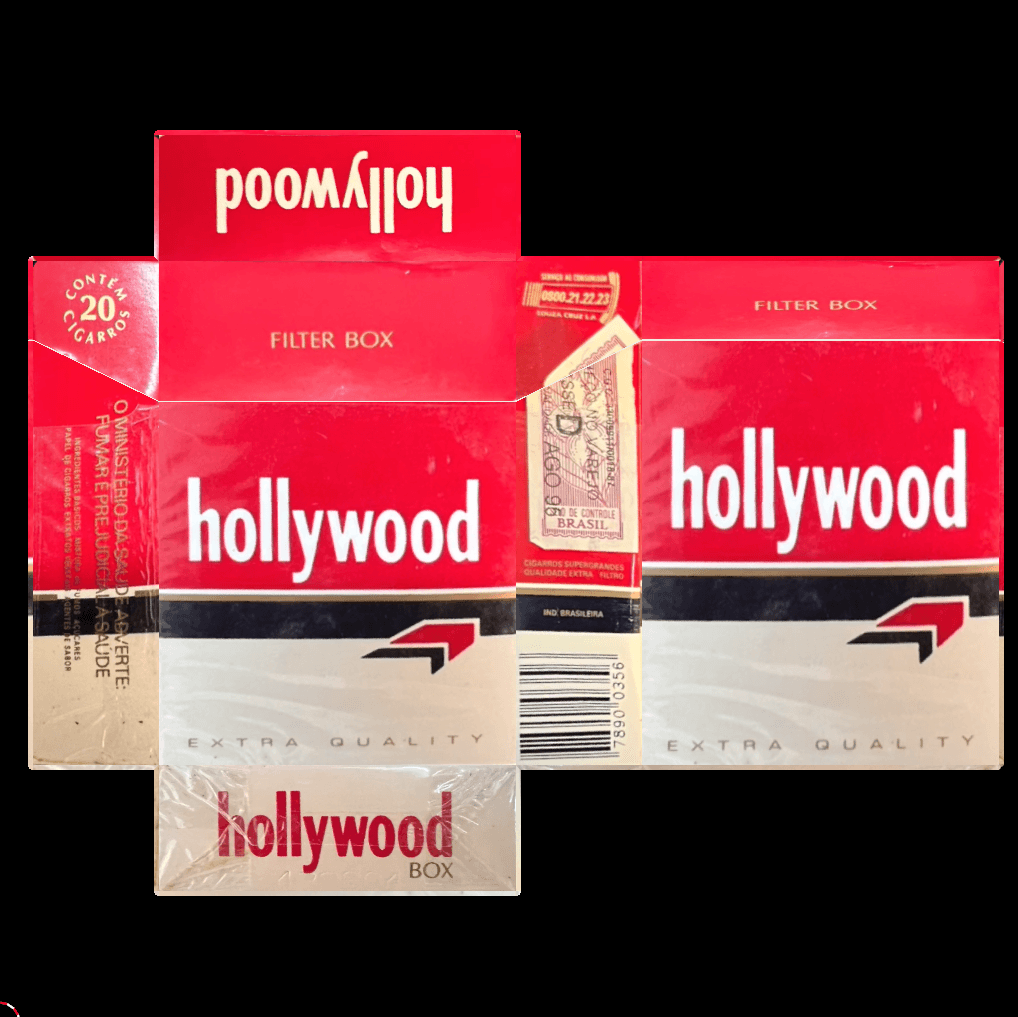

I found some cool cigarette packs in the wild and wanted to train a Flux LoRA on them so that I could generate new designs. The problem is that every photo shows the pack in a different view arrangement.

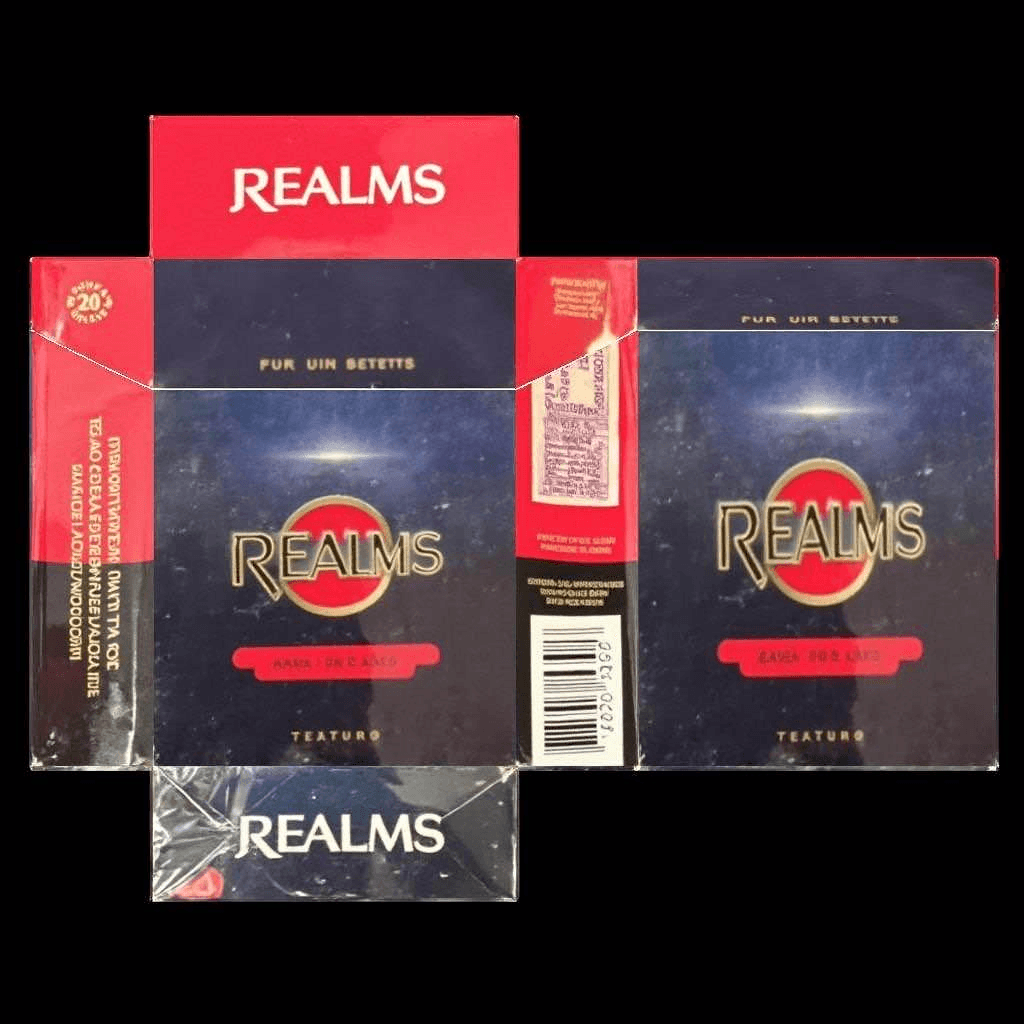

So I had an idea: first project them all onto the same UV layout, then train a LoRA on the result. Would this work? Would Flux keep the UV layout and learn where to put text and other details?

Step 1: Projecting all samples to the same UV layout

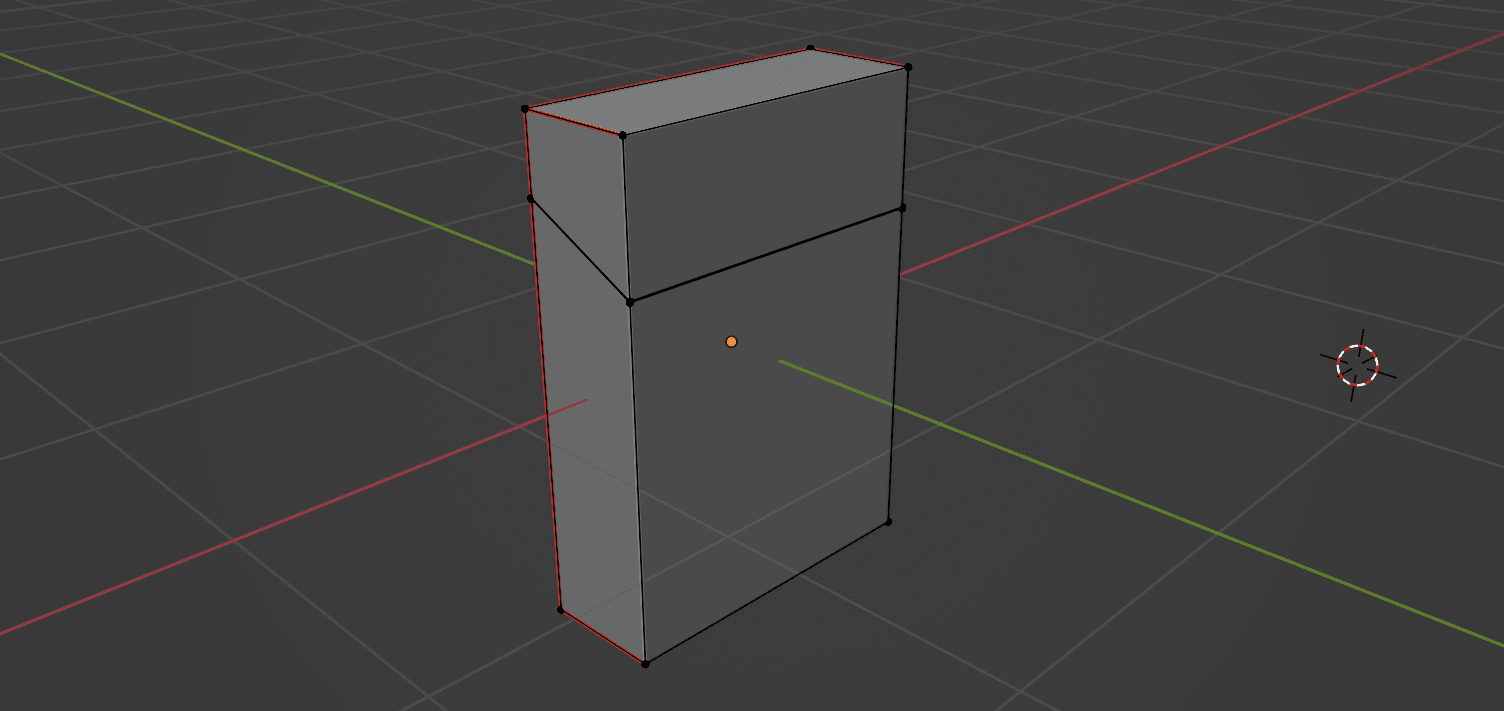

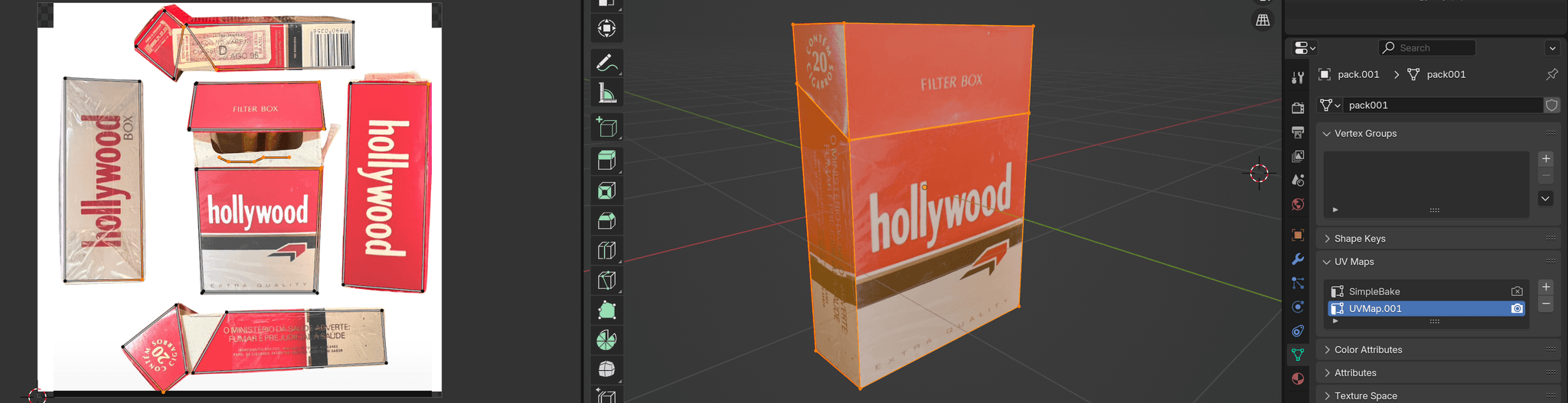

In Blender, I created the following model:

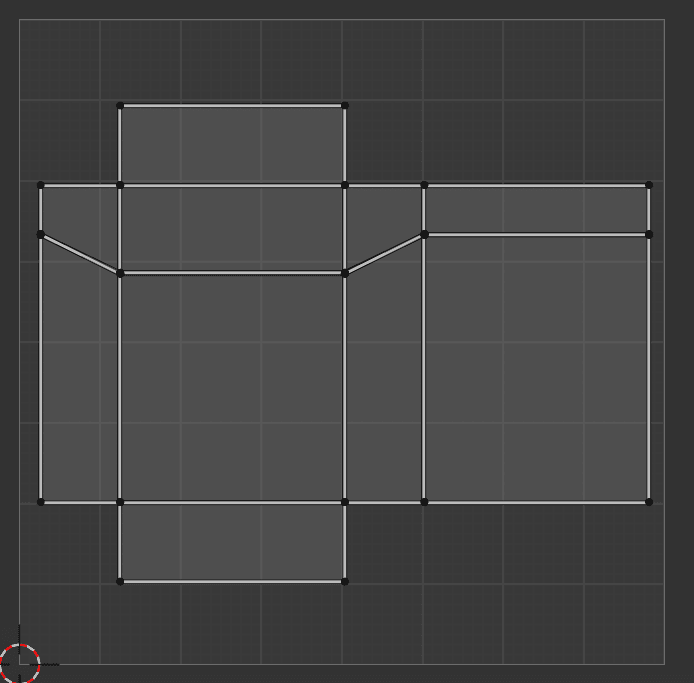

The red edges mark UV seams. They tell Blender where it may cut the mesh open when unwrapping it. The unwrapped UV map looks like this:

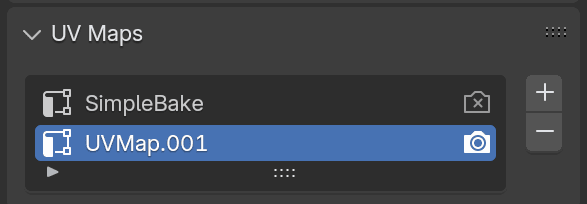

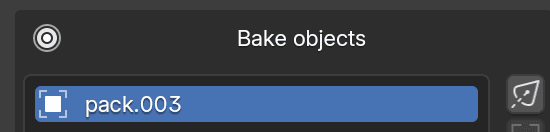

I named the target UV map "SimpleBake" because I will be using the SimpleBake plugin, which automatically looks for a UV map with that name. Then I created a second UV map and made it the active one by clicking its render icon.

The second map holds the sample-specific UV layout, which is different for every photo.

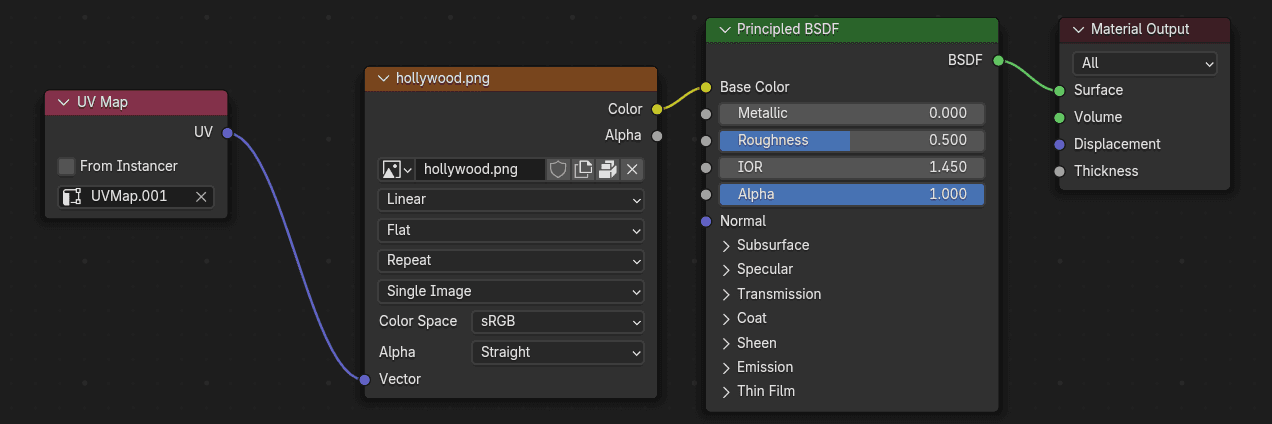

Before anything shows up in the viewport, you also need to set up a material that uses the sample-specific UV map.

With the second UV map and the material in place, we can load a sample photo and start dragging the UV faces on the left to the right spots. You have to eyeball it a bit and keep checking the result on the right until all faces are mapped correctly.

And now the trick: we bake the texture from the second UV map into the first UV map, the one called SimpleBake. As mentioned before, I'll be using the SimpleBake plugin.
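To make the idea concrete, here is a conceptual sketch of what such a bake does for a single rectangular face: every pixel of the face in the target layout is looked up in the photo through the sample-specific mapping. This is not how SimpleBake is implemented. The function name `bake_face`, the nested-list image format, the top-left image origin, and the nearest-neighbour sampling are all assumptions made for illustration.

```python
def bake_face(photo, target, src_corners, dst_rect):
    """Resample one face from the photo into the shared target layout.

    photo, target: images as nested lists, indexed [row][col].
    src_corners:   where the face's top-left, top-right and bottom-left
                   corners sit in the photo, as normalized (x, y) in [0, 1]
                   with the origin at the top-left of the image.
    dst_rect:      (x0, y0, x1, y1) pixel rectangle of the face in target.
    """
    photo_h, photo_w = len(photo), len(photo[0])
    (ox, oy), (ux, uy), (vx, vy) = src_corners
    x0, y0, x1, y1 = dst_rect
    for y in range(y0, y1):
        # t runs from 0 to 1 down the face, measured at pixel centers.
        t = (y + 0.5 - y0) / (y1 - y0)
        for x in range(x0, x1):
            # s runs from 0 to 1 across the face.
            s = (x + 0.5 - x0) / (x1 - x0)
            # Affine map from face coordinates (s, t) to photo coordinates.
            sx = ox + s * (ux - ox) + t * (vx - ox)
            sy = oy + s * (uy - oy) + t * (vy - oy)
            # Nearest-neighbour lookup, clamped to the photo bounds.
            col = min(max(int(sx * photo_w), 0), photo_w - 1)
            row = min(max(int(sy * photo_h), 0), photo_h - 1)
            target[y][x] = photo[row][col]
    return target
```

Calling this once per face, with a different `src_corners` for each photo but the same `dst_rect` every time, is what puts every sample into the same layout.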

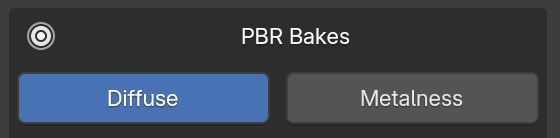

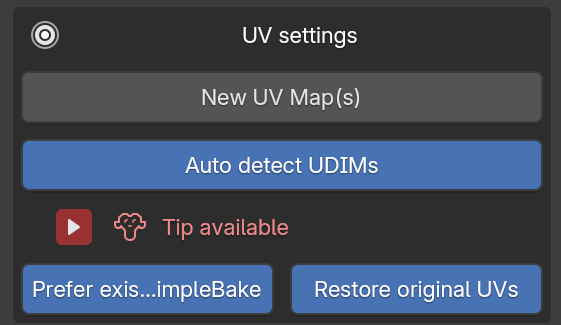

The settings are:

The cool thing is that this will be super fast since SimpleBake can use the Eevee renderer to bake objects.

And voilà! I now have a transformed UV map:

Repeat this for every training sample, then kick off the Flux LoRA training.
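Once all textures are baked, they need captions before training. The sketch below assumes the trainer reads a folder of images with same-named `.txt` caption files, which is the dataset layout ai-toolkit documents. The helper name `write_captions`, the folder path, and the caption text with its trigger word are hypothetical.

```python
from pathlib import Path

IMAGE_EXTS = {".jpg", ".jpeg", ".png"}


def write_captions(dataset_dir: Path, caption: str) -> list[Path]:
    """Write a .txt caption next to every image that does not have one yet."""
    written = []
    for image in sorted(dataset_dir.iterdir()):
        if image.suffix.lower() not in IMAGE_EXTS:
            continue
        caption_file = image.with_suffix(".txt")
        if caption_file.exists():
            continue  # keep captions that were written by hand
        caption_file.write_text(caption + "\n", encoding="utf-8")
        written.append(caption_file)
    return written


# Example call (hypothetical folder and trigger word):
# write_captions(Path("./dataset/baked"), "cigpack_uv, unwrapped cigarette pack texture")
```

Using one shared caption is only a starting point; per-image captions describing each design can be dropped in by hand and will not be overwritten.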

Step 2: Training a Flux LoRA

I used Ostris/ai-toolkit to train the Flux LoRA with mostly default settings. Here are some results:

Step 3: Putting them on a new model

This is a quick Python script that swaps the texture of a donor model and exports the result as a glb file, ready to be served. This is the only way I could get the textures to work on a new model in a live browser runtime. (I needed this to display on the fly in a glif.app artifact.)

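    import shutil
    from pathlib import Path

    from gltflib import GLTF

    DONOR_PATH = Path("./data/cigs.gltf")
    # Texture file that sits next to the donor .gltf; overwriting it swaps the texture.
    DONOR_TEXTURE = Path("./data/pack_001_Bake1_PBR_Diffuse.jpg")


    def get_glb_with_texture(new_texture: Path) -> GLTF:
        # Replace the donor texture on disk first, then load the model that uses it.
        shutil.copy(new_texture, DONOR_TEXTURE)
        return GLTF.load(DONOR_PATH)  # load() detects the format from the extension


    def save_glb(gltf: GLTF, path: Path) -> None:
        gltf.export(path)  # export() also picks the format from the extension


    if __name__ == "__main__":
        glb = get_glb_with_texture(Path("tests/GdJXYGJWcAA2yR_.jpeg"))
        save_glb(glb, Path("tests/cigs_with_texture.glb"))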
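
The script above only writes the glb file to disk. To hand it to a browser, something along these lines could work. It is a minimal standard-library sketch and not the endpoint used for the glif.app artifact. The `make_handler` and `serve` names, the port, and the permissive CORS header are assumptions.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer
from pathlib import Path


def make_handler(glb_path: Path):
    """Build a request handler that answers every GET with the given glb file."""

    class GLBHandler(BaseHTTPRequestHandler):
        def do_GET(self):
            if not glb_path.is_file():
                self.send_error(404, "GLB not found")
                return
            body = glb_path.read_bytes()
            self.send_response(200)
            # Registered media type for binary glTF.
            self.send_header("Content-Type", "model/gltf-binary")
            self.send_header("Content-Length", str(len(body)))
            # Assumption: the viewer runs on another origin, so allow any origin.
            self.send_header("Access-Control-Allow-Origin", "*")
            self.end_headers()
            self.wfile.write(body)

    return GLBHandler


def serve(glb_path: Path, port: int = 8000) -> None:
    """Serve the file on localhost until the process is interrupted."""
    HTTPServer(("127.0.0.1", port), make_handler(glb_path)).serve_forever()


# Example call, using the file written by save_glb:
# serve(Path("tests/cigs_with_texture.glb"))
```

Reading the file on every request keeps the sketch simple and means a freshly exported texture is picked up without restarting the server.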